Apple has recently announced new child safety protections that are coming this fall with the introduction of iOS 15, iPadOS 15, and macOS Monterey.

We'll take a closer look at these expanded child safety features and the technology behind them below.

Child Sexual Abuse Material Scanning

The most notable change is that Apple will start using new technology to detect images of child abuse stored in iCloud Photos.

These images are known as Child Sexual Abuse Material, or CSAM, and Apple will report instances of them to the National Center for Missing and Exploited Children. The NCMEC is a reporting center for CSAM and works with law enforcement agencies.

Apple's CSAM scanning will be limited to the United States at launch.

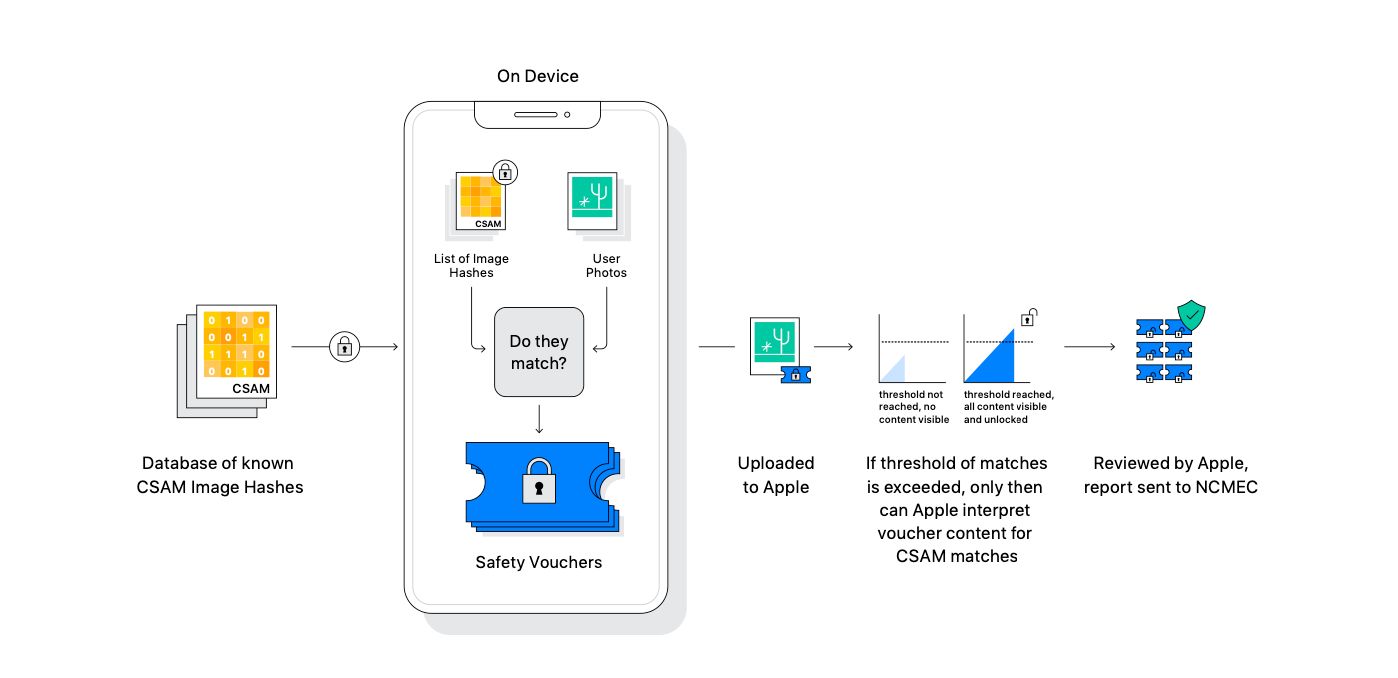

Apple says the system uses cryptography and was designed with privacy in mind. Images are scanned on-device before being uploaded to iCloud Photos.

According to Apple, there's no need to worry about Apple employees seeing your actual photos. Instead, the NCMEC provides Apple with image hashes of known CSAM images. A hash function takes an image and returns a long string of letters and numbers that acts as a fingerprint for that image.

Apple takes those hashes and transforms the data into an unreadable set of hashes stored securely on a device.
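To make the idea of matching by hash concrete, here is a minimal sketch in Python. It is only an illustration: SHA-256 is used as a stand-in because it ships with Python, and it matches only byte-identical files. The article does not say which hash Apple uses, and the names `image_digest` and `matches_known` are hypothetical. A plain set lookup like this also lacks the privacy protections described in the rest of this section.

```python
import hashlib


def image_digest(image_bytes: bytes) -> str:
    """Return a hex fingerprint of the raw image bytes (SHA-256 stand-in)."""
    return hashlib.sha256(image_bytes).hexdigest()


def matches_known(image_bytes: bytes, known_digests: set) -> bool:
    """Check whether the image's fingerprint appears in a set of known fingerprints."""
    return image_digest(image_bytes) in known_digests


# Hypothetical data: the "database" holds fingerprints only, never the images.
known_digests = {image_digest(b"bytes of a known image")}

print(matches_known(b"bytes of a known image", known_digests))
print(matches_known(b"bytes of some other photo", known_digests))
```

The point of the design is that whoever holds the list of fingerprints can test for a match without ever holding the original images.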

Before the image is synced to iCloud Photos, its hash is checked against the set of known CSAM hashes. Using a cryptographic technology called private set intersection, the system determines whether there is a match without revealing the result.

If there is a match, the device creates a cryptographic safety voucher that encodes the match along with more encrypted data about the image. That voucher is uploaded to iCloud Photos with the image.

Unless an iCloud Photos account crosses a specific threshold of CSAM content, the system ensures that the safety vouchers can't be read by Apple. That is thanks to a cryptographic technology called secret sharing.
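The threshold idea can be sketched with Shamir's secret sharing, a classic threshold scheme. This is an assumption chosen for illustration, since the article does not describe Apple's exact construction. In the sketch, a secret is split into shares so that any `threshold` of them recover it, while fewer reveal nothing useful. The prime, the function names, and the sample values are all hypothetical.

```python
import secrets

# A Mersenne prime used as the field modulus (assumption for this sketch);
# secrets must be integers below it.
PRIME = 2**127 - 1


def split_secret(secret: int, threshold: int, num_shares: int) -> list:
    """Split `secret` into `num_shares` points; any `threshold` of them recover it."""
    if not 0 <= secret < PRIME:
        raise ValueError("secret must be in the range [0, PRIME)")
    if not 1 <= threshold <= num_shares:
        raise ValueError("need 1 <= threshold <= num_shares")
    # Random polynomial of degree threshold - 1 whose constant term is the secret.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]
    shares = []
    for x in range(1, num_shares + 1):
        y = 0
        for c in reversed(coeffs):  # Horner's rule, evaluated modulo PRIME
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares


def recover_secret(shares: list) -> int:
    """Recover the constant term by Lagrange interpolation at x = 0."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        # pow(den, -1, PRIME) is the modular inverse (Python 3.8+).
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


shares = split_secret(123456789, threshold=3, num_shares=5)
print(recover_secret(shares[:3]))   # any three shares recover the secret
print(recover_secret(shares[2:5]))  # a different three work as well
```

By analogy, each voucher would contribute one share of a key, and only an account with enough matching vouchers would give the server enough shares to decrypt them.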

According to Apple, the undisclosed threshold provides a high level of accuracy and ensures less than a one in one trillion chance per year of incorrectly flagging a given account.

When the threshold is exceeded, the technology will allow Apple to interpret the vouchers and the matching CSAM images. Apple will then manually review each report to confirm a match. If a match is confirmed, Apple will disable the user's account and send a report to the NCMEC.

There will be an appeal process for reinstatement if a user feels that their account has been mistakenly flagged by the technology.

If you have privacy concerns about the new system, Apple has confirmed that no photos will be scanned with this technology if you disable iCloud Photos. You can do that by heading to Settings > [Your Name] > iCloud > Photos.

There are a few downsides to turning off iCloud Photos. All of your photos and videos will be stored on your device. That might cause problems if you have a lot of images and videos and an older iPhone with limited storage.

Also, photos and videos captured on the device won’t be accessible on other Apple devices using the iCloud account.

Apple explains more about the technology being used in the CSAM detection in a white paper PDF. You can also read an Apple FAQ with additional information about the system.

In the FAQ, Apple notes that the CSAM detection system can't be used to detect anything other than CSAM. The company also says that in the United States and many other countries, possession of CSAM images is a crime and that Apple is obligated to inform authorities.

The company also says that it will refuse any government demands to add a non-CSAM image to the hash list. It also explains why non-CSAM images couldn't be added to the system by a third party.

Because of human review and the fact that the hashes used are from known and existing CSAM images, Apple says that the system was designed to be accurate and avoid issues with other images or innocent users being reported to the NCMEC.

Additional Communication Safety Protocol in Messages

Another new feature adds safety protocols to the Messages app. It offers tools that warn children and their parents when sexually explicit photos are sent or received.

When one of these messages is received, the photo will be blurred and the child will also be warned. They can see helpful resources and are told that it is okay if they do not view the image.

The feature will only be for accounts set up as families in iCloud. Parents or guardians will need to opt in to enable the communication safety feature. They can also choose to be notified when a child of 12 or younger sends or receives a sexually explicit image.

For children aged 13 to 17, parents are not notified. But the child will be warned and asked if they want to view or share a sexually explicit image.
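The age rules above can be summarized as a small decision function. This is a sketch of the rules as described in this article, not Apple's implementation. The names `SafetyResponse` and `communication_safety_response` are hypothetical, the function assumes the image has already been classified as sexually explicit, and treating anyone over 17 as out of scope is an assumption.

```python
from dataclasses import dataclass


@dataclass
class SafetyResponse:
    blur_and_warn: bool   # child sees a blurred image and a warning
    notify_parents: bool  # parents receive a notification


def communication_safety_response(
    age: int,
    feature_enabled: bool,
    parent_notifications_enabled: bool,
) -> SafetyResponse:
    """Decide what happens when an explicit image is sent or received."""
    # The feature is opt-in and only applies to child accounts (assumed: 17 or younger).
    if not feature_enabled or age > 17:
        return SafetyResponse(blur_and_warn=False, notify_parents=False)
    # Parents can only be notified for children aged 12 or younger.
    notify = parent_notifications_enabled and age <= 12
    return SafetyResponse(blur_and_warn=True, notify_parents=notify)


print(communication_safety_response(10, True, True))
print(communication_safety_response(15, True, True))
```

In this sketch, a 15-year-old is still warned, but no notification is sent to parents even when they have turned notifications on.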

Messages uses on-device machine learning to determine whether an image attachment is sexually explicit. Apple will not have access to the messages or the image content.

The feature will work for both regular SMS and iMessage messages and is not linked to the CSAM scanning feature we detailed above.

Expanded Safety Guidance in Siri and Search

Finally, Apple will expand guidance for both Siri and Search features to help children and parents stay safe online and receive help in unsafe situations. Apple pointed to an example where users who ask Siri how they can report CSAM or child exploitation will be provided resources on how to file a report with authorities.

Siri and Search will also be updated to intervene when anyone performs search queries related to CSAM. The intervention will explain to users that interest in the topic is harmful and problematic, and it will point to resources from partners for getting help with the issue.

More Changes Coming With Apple's Latest Software

Developed in conjunction with safety experts, the three new features from Apple are designed to help keep children safe online. Even though the features might cause concern in some privacy-focused circles, Apple has been forthcoming about the technology and how it will balance privacy concerns with child protection.
