Computers have become essential parts of our modern lives. We use them for work, school, shopping, entertainment, and almost everything else.

But where did it all start for these groundbreaking devices, and what does the future hold for them? Here's how the computer has changed over time.

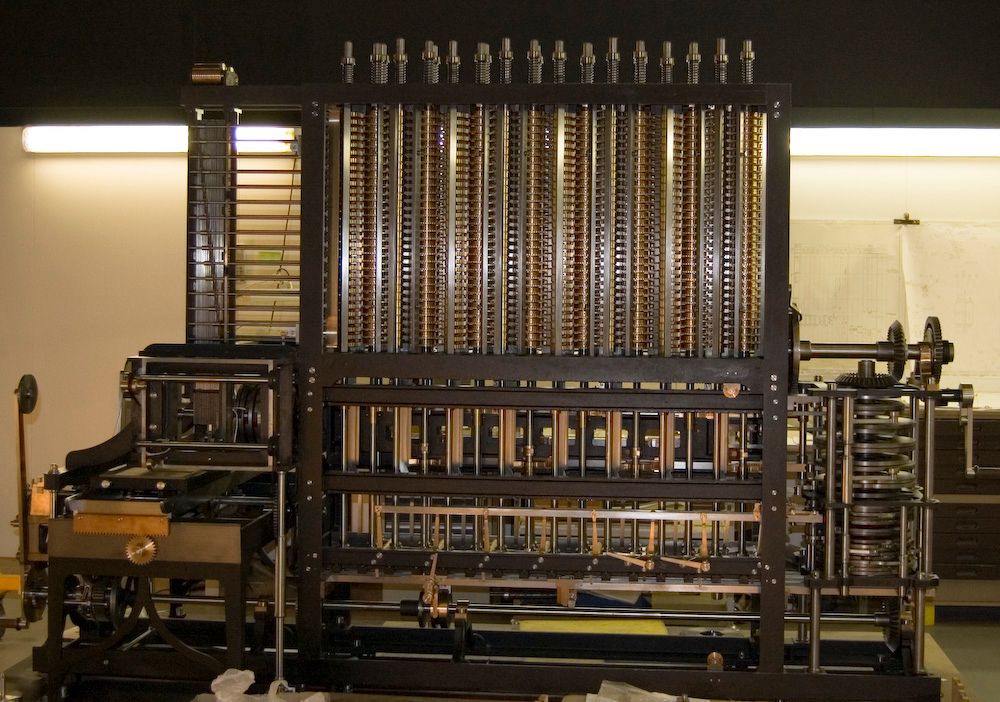

The First Automatic Computing Engine Didn't Look Like You'd Expect

The first automatic computing engine was designed by Charles Babbage in the 19th century and is known as the Babbage Difference Engine. However, a machine of this kind had been proposed earlier by Johann Helfrich von Müller.

Müller was a German engineer who sketched a proposed structure for this computer on paper in the late 18th century. Unfortunately for him, though, technology hadn't yet reached the point where he could build the device himself.

The Babbage Difference Engine was huge: a machine built to Babbage's plans weighs around five tons. It was designed to calculate and print mathematical tables, work that was slow and error-prone when done by hand.
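For the curious, the engine's trick was the method of finite differences, which lets you tabulate a polynomial using nothing but repeated addition. The snippet below is only a rough sketch of that idea, not a model of the actual mechanism. The function name `difference_table_values` and the example polynomial are illustrative choices, not anything taken from Babbage's design.

```python
def difference_table_values(initial_differences, count):
    """Tabulate a polynomial using additions only (method of finite differences).

    initial_differences: [f(0), first difference, second difference, ...]
    count: how many table entries to produce
    """
    # registers[0] is the current value, registers[1] the first difference, etc.
    registers = list(initial_differences)
    values = []
    for _ in range(count):
        values.append(registers[0])
        # Add each higher-order difference into the register below it.
        # The last register (the constant difference) never changes.
        for i in range(len(registers) - 1):
            registers[i] += registers[i + 1]
    return values


# Example polynomial (chosen for illustration): f(x) = x**2 + x + 41
# f(0) = 41, first difference f(1) - f(0) = 2, constant second difference = 2
table = difference_table_values([41, 2, 2], 6)
print(table)
```

Notice that the loop never multiplies anything. That is what made the approach suitable for a machine made of gears and wheels.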

Some argue that the Electronic Numerical Integrator and Computer (ENIAC) was the first actual computer. John Mauchly and J. Presper Eckert built this device in the 1940s, over a century after Babbage designed his Difference Engine. They filed for a patent on it, which let them take credit for the first computer until a US court invalidated the patent in 1973.

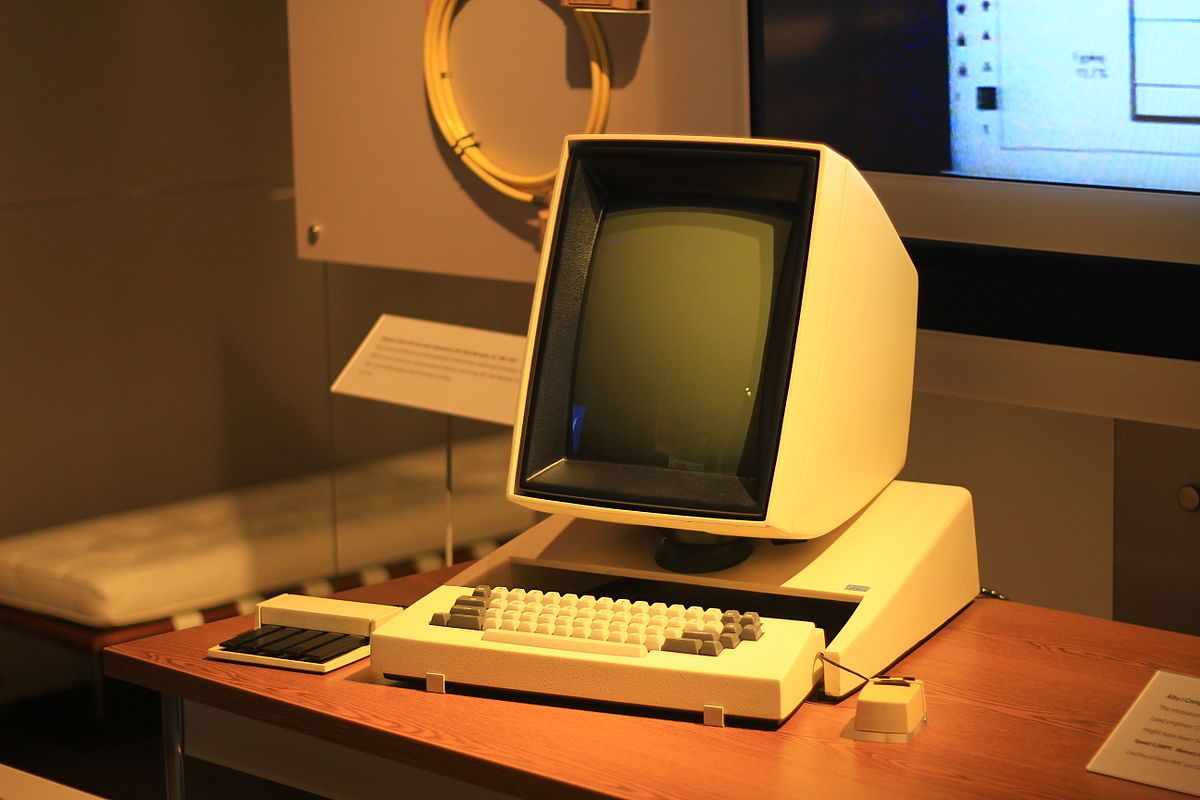

The First Computer Monitor Was Created in 1973

Neither the Babbage Difference Engine nor the ENIAC looks anything like the computers we use today. The Xerox Alto, introduced in 1973, came much closer: it is widely regarded as the first computer designed around a graphical screen display.

The Xerox Alto was developed as a research system at Xerox PARC (Palo Alto Research Center), the company's research lab in California. Its user-friendly features helped make it groundbreaking in the electronics industry. Even a child could operate this computer, which was pretty much unthinkable with the Babbage Difference Engine or the ENIAC.

The Alto paved the way for modern-day graphical user interfaces (GUIs) with its easy-to-use graphics software. Its screen was a bitmap display, in which every dot on the screen corresponds to a bit in memory. That is rudimentary by today's standards, but it was pretty impressive for the 1970s. It even had its own mouse, though it looked rather different from the ones we use today.
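If you're wondering what a bitmap display actually means, here's a tiny sketch: the screen is just a grid of bits, and drawing means flipping bits on or off. The 8×8 size and the helper names (`make_bitmap`, `set_pixel`, `render`) are made up for illustration and have nothing to do with the Alto's real software.

```python
WIDTH, HEIGHT = 8, 8  # a tiny hypothetical screen, far smaller than any real display


def make_bitmap():
    """Create a blank screen: one bit (0 = off) per pixel."""
    return [[0] * WIDTH for _ in range(HEIGHT)]


def set_pixel(bitmap, x, y, on=True):
    """Turn a single pixel on or off by changing its bit."""
    bitmap[y][x] = 1 if on else 0


def render(bitmap):
    """Show the bitmap as text: '#' for lit pixels, '.' for dark ones."""
    return "\n".join("".join("#" if bit else "." for bit in row) for row in bitmap)


screen = make_bitmap()
for i in range(WIDTH):
    set_pixel(screen, i, i)  # draw a diagonal line, one pixel per row
print(render(screen))
```

Because every pixel is individually addressable, the same screen can show text, windows, or pictures, which is what a graphical interface needs.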

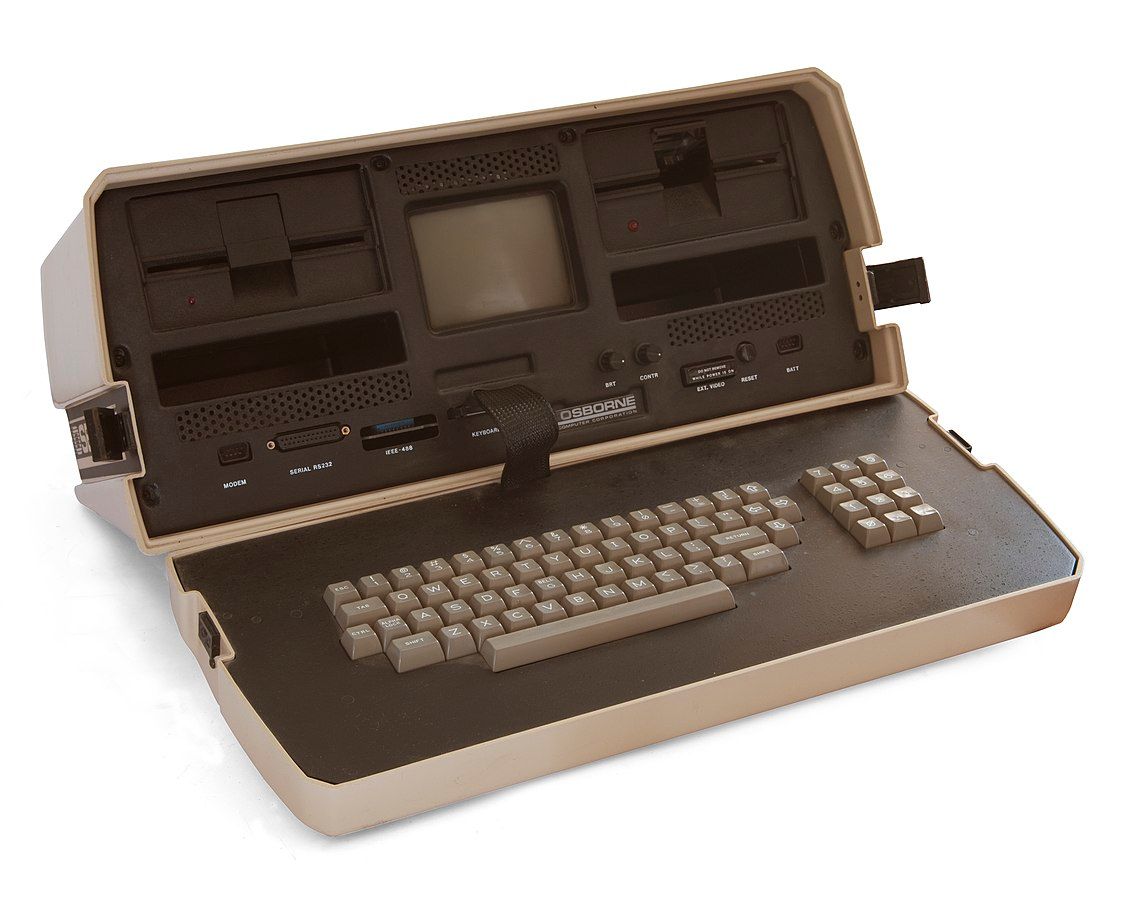

The First Publicly Available Laptop Came Just Eight Years After the First Monitor

It doesn't really look much like a laptop, does it?

This device is called the Osborne 1. It is generally considered the first commercially successful portable computer, the forerunner of today's laptops. It was launched in 1981 by Adam Osborne, a British author and software publisher. Like the Xerox Alto, it had its own built-in display, but the screen measured only five inches. It had 64 KB of random access memory (RAM), 4 KB of read-only memory (ROM), and two floppy disk drives.

While this laptop certainly caused a lot of discussion in the industry upon its release, it wasn't exactly convenient as a portable computer. Initially, the Osborne 1 didn't have a battery, so it had to be plugged into an outlet whenever it was in use. A battery pack became available later, but it provided only about one hour of use away from an outlet.

Not many people could afford the Osborne 1 when it was first released. Its original price in 1981 was $1,795. On paper, that sits within the range of standard laptops today, which cost anywhere between $800 and $2,000 and have far more features. After four decades of inflation, though, the Osborne's price works out to roughly three times as much in today's money. But considering that the Osborne 1 was the first ever laptop you could buy, can we really be mad at the price?

Modern Computers Have Pushed Boundaries We Could Never Have Previously Imagined

The computer has come an incredibly long way in the 21st century. We've seen drastic improvements in picture quality, memory, storage, and battery life, among other things. But what does the future hold for computers?

There are many things that people want to see in future computers: all-day battery life, even faster processing speeds, and even better graphics. Well, tech companies are already looking into improving these aspects. Lenovo has already released a laptop with a dual-display feature. Meanwhile, some computers now on the market—such as the Dell XPS 13 and the HP Spectre x360—have incredible 4K displays.

But more than anything, the quantum computer is now taking the stage as the future of computing. Quantum computers are pretty different from those you'll find in a store. These machines use the properties of quantum physics to perform operations. The key difference between traditional and quantum computers is the way they represent data. Conventional computers use bits, each of which is either 0 or 1, whereas quantum computers use quantum bits, or "qubits", which can exist in a combination (a superposition) of 0 and 1 at the same time.

Quantum computers could therefore solve certain huge problems that are out of practical reach for conventional machines. Because a set of qubits can represent many combinations at once, specially designed quantum algorithms can work through some of these problems far faster than any known classical approach. The exciting part of this technology is that, in ten years, these computers could even help tackle substantial global crises, such as climate change or pandemics like COVID-19. Such capabilities would potentially make quantum computers life-saving, which would be a huge technological step for humanity.
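To get a feel for the difference between bits and qubits, here is a small sketch that runs on an ordinary computer. It is a classical simulation of the bookkeeping only, not real quantum computing, and the function names (`classical_states`, `uniform_superposition`, `measurement_probabilities`) are hypothetical. The point is that n bits hold one of 2^n values at a time, while describing n qubits takes 2^n numbers (amplitudes) all at once.

```python
import math


def classical_states(n_bits):
    """Number of distinct values n classical bits can hold (one at a time)."""
    return 2 ** n_bits


def uniform_superposition(n_qubits):
    """Amplitudes of n qubits in an equal superposition of every combination."""
    size = 2 ** n_qubits
    amplitude = 1 / math.sqrt(size)
    return [amplitude] * size


def measurement_probabilities(amplitudes):
    """Probability of observing each combination: the squared amplitude."""
    return [abs(a) ** 2 for a in amplitudes]


state = uniform_superposition(3)
probs = measurement_probabilities(state)
print(classical_states(3))   # combinations 3 bits can represent, one at a time
print(len(state))            # amplitudes needed to describe 3 qubits
print(round(sum(probs), 10)) # the probabilities always add up to 1
```

Each extra qubit doubles the length of that list, which is why simulating even a few dozen qubits becomes very demanding for a conventional machine.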

There’s No Knowing What Computers Could End Up Doing, but It’s Incredible to Think About

With technology advancing so massively from decade to decade, one can only imagine what our computers will be able to do in 30 or 40 years.

With our home computers' current capabilities and the promising possibilities of quantum computers, we can only assume that computers will continue to change the world even more than they already have.